When a visitor arrives on your site, it's your responsibility to provide an excellent experience—both because it's your job and because it's the best way to drive conversions.

A/B testing is a great place to start.

If you're unfamiliar with the A/B testing process, it's a data-driven way to learn what resonates with your site visitors. With the information you get from A/B testing, you can provide a better site experience and boost your business's chances of growth.

In this article, you'll learn exactly what A/B testing means in digital marketing, what you should test, how to manage the A/B testing process from beginning to end, the metrics you should track, and a few real examples of A/B tests to inspire you.

What is A/B testing?

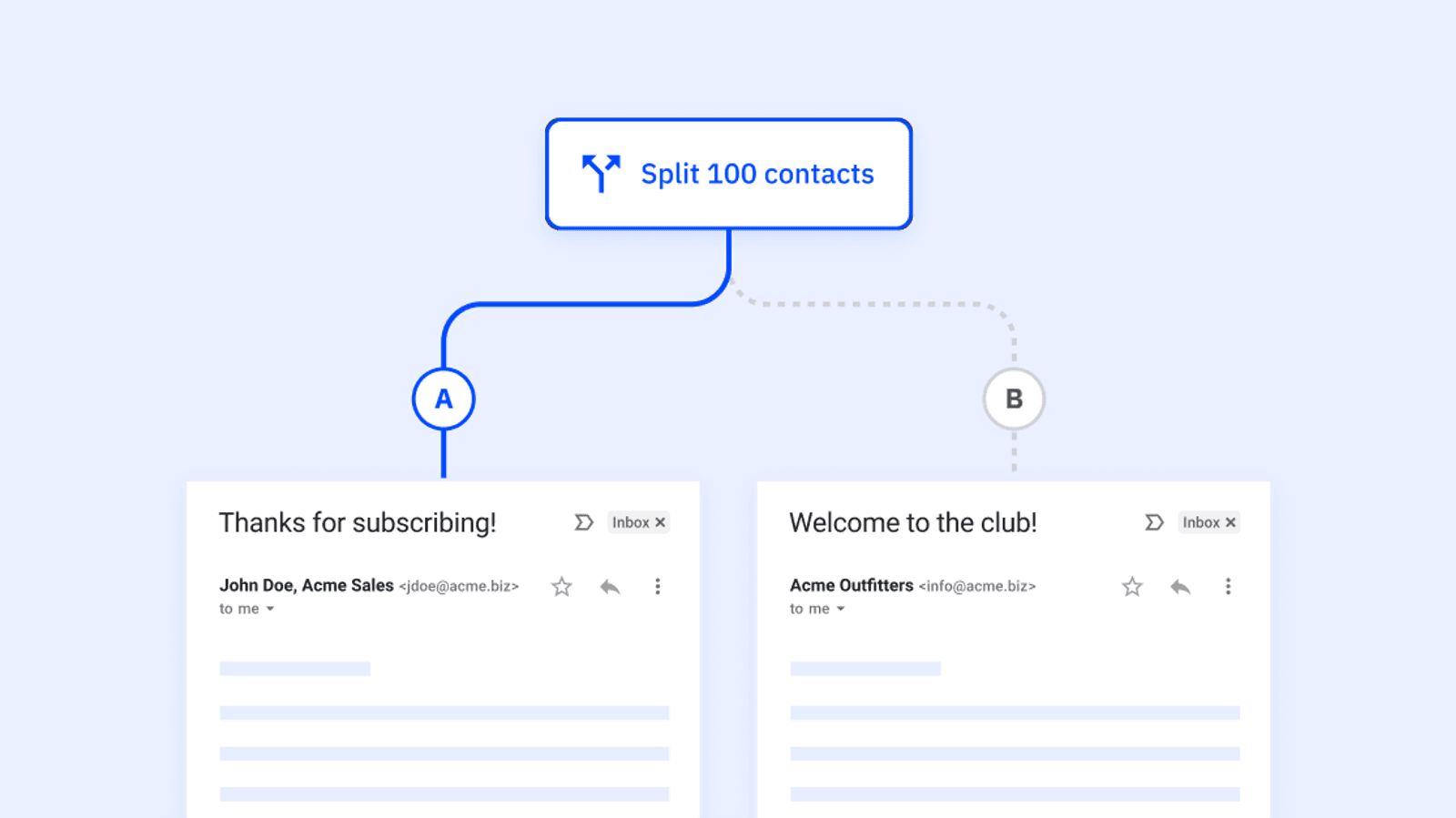

A/B testing (also called split or bucket testing) compares two variations of a content asset (such as a webpage, email, or ad) to see which one drives better results.

By evenly dividing traffic and tracking key metrics (like clicks or conversions), you pinpoint the more effective version and optimize future campaigns accordingly. Once you have your preferred variation, you send 100% of your traffic to that variation and remove the other — confident you're now offering a more optimized user experience for your visitors.
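Most testing platforms handle the traffic split for you. If you're curious how an even and consistent split can work, here is a minimal Python sketch. It assumes each visitor has a stable ID (for example, from a cookie); the function name `assign_variant` and the experiment name are hypothetical. Hashing the ID means a returning visitor always sees the same variation.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "checkout-test", split_percent: int = 50) -> str:
    """Deterministically assign a visitor to variation "A" or "B".

    Hashing the experiment name together with the visitor ID keeps each
    visitor in the same bucket for this experiment, while other experiments
    bucket the same visitor independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    # Turn the first 8 bytes of the SHA-256 digest into a bucket from 0 to 99.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    return "A" if bucket < split_percent else "B"


# Simulate 10,000 hypothetical visitors to check the split is roughly even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)  # close to 5,000 each
```

Changing `split_percent` lets you send a smaller share of traffic to a riskier variation without changing how visitors are identified.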

A/B testing helps you better understand what your users or visitors want so you can deliver it to them and encourage them to take the action you're after.

A common example is modifying landing pages to see which design results in higher conversions. The variation could be as simple as testing a headline or header image to see how users respond. The aim is to see which version performs better with your audience.

When should you A/B test (& why)?

There's no single answer to this question.

A/B testing aims to understand user behavior, improve the user experience, and increase engagement. This means there are a variety of situations where A/B testing can be put to good use.

1. To identify visitor pain points

If you want to know why your conversion rate isn't increasing or how to improve your click-through rate, you need to identify any pain points. This is where A/B testing can help. It allows you to find areas where visitors struggle on your website.

Imagine you have a high cart abandonment rate. To find out why visitors are abandoning ship, you run an A/B test.

You suspect (the hypothesis of your A/B test) that users might struggle with how long the checkout process is. So, alongside your original checkout process, you create a shorter version, Variation B. In this case, Variation A, or the original version, would be considered the control version.

You send 50% of your traffic through your original checkout process and 50% through your new one.

The results confirm what you thought: Users prefer the shorter option. Your checkout completion rates increase by 17% over the course of the test's run.
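A figure like "17%" can describe either a relative lift (how much the rate grew compared with the original) or an absolute change in percentage points, so it helps to calculate and label both. Here's a short Python sketch using hypothetical completion counts, chosen so the relative lift comes out at 17%:

```python
from typing import Tuple


def lift(control_conversions: int, control_total: int,
         variant_conversions: int, variant_total: int) -> Tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift as a fraction)."""
    control_rate = control_conversions / control_total
    variant_rate = variant_conversions / variant_total
    absolute_points = (variant_rate - control_rate) * 100
    relative = (variant_rate - control_rate) / control_rate
    return absolute_points, relative


# Hypothetical numbers: 600 of 1,000 shoppers finish the original checkout,
# and 702 of 1,000 finish the shorter one.
points, relative = lift(600, 1000, 702, 1000)
print(f"{points:.1f} percentage points, {relative:.0%} relative lift")
# 10.2 percentage points, 17% relative lift
```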

By running the A/B test, you identified the hurdle consumers were facing and can now make the necessary changes to improve the customer experience going forward (and hopefully increase conversions, too). Running A/B tests like this also gives you more confidence in the changes you make to future campaigns.

2. To reduce bounce rates and increase engagement

A/B testing is also a great way to make sure your written content appeals to your target audience. You can find out what your visitors are looking for, how they want to navigate your blog or software, and what they're likely to engage with.

As a result, fewer visitors will bounce away from your site, and more will spend time engaging with your content or email campaigns.

3. To see statistically significant improvements

When you A/B test an email subject line, landing page copy, or a paid ad, you don't come away with maybes or "I guess so"s. Because this type of experimentation relies on data, a test that reaches statistical significance gives you a clear answer, or "winner," when it's complete.

A/B testing lets you check whether a change produces a statistically significant improvement in metrics like click-through rates, email engagement rates, time spent on the page, cart abandonment rate, and CTA clicks. This can greatly improve your confidence as you continue to run tests on different variables.

4. To drive higher return on investment (ROI) from campaigns

By running A/B tests on your digital marketing or advertising campaigns, you have a higher chance of increasing your ROI.

Let's say you're planning a high-investment email marketing campaign around the holiday season. Before you launch, you run an A/B test on two versions of your standard newsletter layout to see which performs better.

With the results from this test, you know how to structure your emails when the campaign goes live. You know what works best, so you're likely to see better results. Similarly, you can also test things like email subject lines and color schemes to determine what messaging and design your audience will most likely engage with.

Comparing different types of A/B testing

There are multiple types of A/B testing, each serving its own purpose. These types are A/B testing itself, split testing, multivariate testing, and multipage testing. Let's look at how each of these methods is different.

Split testing

A/B testing and split testing are terms often used interchangeably, and they generally refer to the same concept in the context of experimentation and optimization. Both methods involve comparing two or more variations of a webpage, email, or other element to determine which performs better.

However, there might be subtle differences in how people use these terms:

A/B testing

- A/B testing typically refers to a simple comparison between two versions, A and B, to see which one yields better results.

- It's a controlled experiment where the original version (A) is compared against a single variation (B) to measure the impact on a specific metric.

Split testing

- Split testing can be a broader term that encompasses more than just two variations. It involves splitting the audience into different groups and testing multiple versions simultaneously.

- While A/B testing is a specific case of split testing (with only two variations), split testing can involve A/B/C testing, A/B/C/D testing, and so on.

Multivariate testing

Multivariate testing is a form of experimentation where multiple variations of different elements within a webpage, email, or other content are simultaneously tested to determine the optimal combination. In contrast to A/B testing or simple split testing, multivariate testing allows you to test changes in multiple variables at the same time.

Key features of multivariate testing include:

- Multiple variations: This involves testing multiple variations of different elements (such as headlines, images, and call-to-action buttons) within a single experiment.

- Combinations: The goal is to understand not only which individual elements perform best but also which combinations of these elements result in the most effective overall outcome.

- Complex analysis: Due to the increased number of variables and combinations, multivariate testing requires more complex statistical analysis compared to A/B testing.

- Resource intensive: Implementing and analyzing multivariate tests can be more resource-intensive than simpler A/B tests, as they involve tracking and analyzing a larger number of variations.

While multivariate testing provides a more comprehensive understanding of how different elements interact, it may not be suitable for all situations. It's particularly useful when you want to optimize multiple design aspects simultaneously and understand the synergies between different elements.
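The "resource intensive" point comes down to arithmetic: in a full-factorial multivariate test, every combination of options is its own variation, so the number of versions (and the traffic you need) multiplies quickly. A small Python sketch with hypothetical page elements and a hypothetical per-combination traffic target:

```python
from itertools import product

# Hypothetical page elements and the options you'd like to test for each.
elements = {
    "headline": ["Save time today", "Work smarter", "Get started free"],
    "hero_image": ["team_photo", "product_screenshot"],
    "cta_text": ["Start free trial", "Book a demo"],
}

# Each combination of one option per element is a separate variation.
combinations = list(product(*elements.values()))
print(len(combinations))  # 3 * 2 * 2 = 12

# Assumption for illustration only: each combination needs ~1,000 visitors.
visitors_per_combination = 1_000
print(len(combinations) * visitors_per_combination)  # 12000
```

Adding a single extra headline option here would push the total to 16 combinations, which is why simpler A/B tests are often the better starting point.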

Multipage testing

Multipage testing, also known as multipage experimentation, is used in web development and online marketing to compare the performance of different versions of a website across multiple pages.

In a multipage testing scenario, variations of web pages are created with different elements, such as layouts, headlines, images, colors, or calls-to-action. These variations are then presented to different segments of website visitors, and their interactions and behaviors are measured and analyzed.

This type of testing helps you make data-driven decisions about website design and content that will improve user experience and achieve your unique business goals.

A/B testing examples: What can you A/B test?

If we were to answer this question in full, the list would be pretty long. There's seemingly no limit to what elements you can test against each other, such as different phrasing of copy, different design options, and different CTA destinations.

To give you some idea of what you can test (and to save you from a never-ending list), we've covered some of the most popular areas.

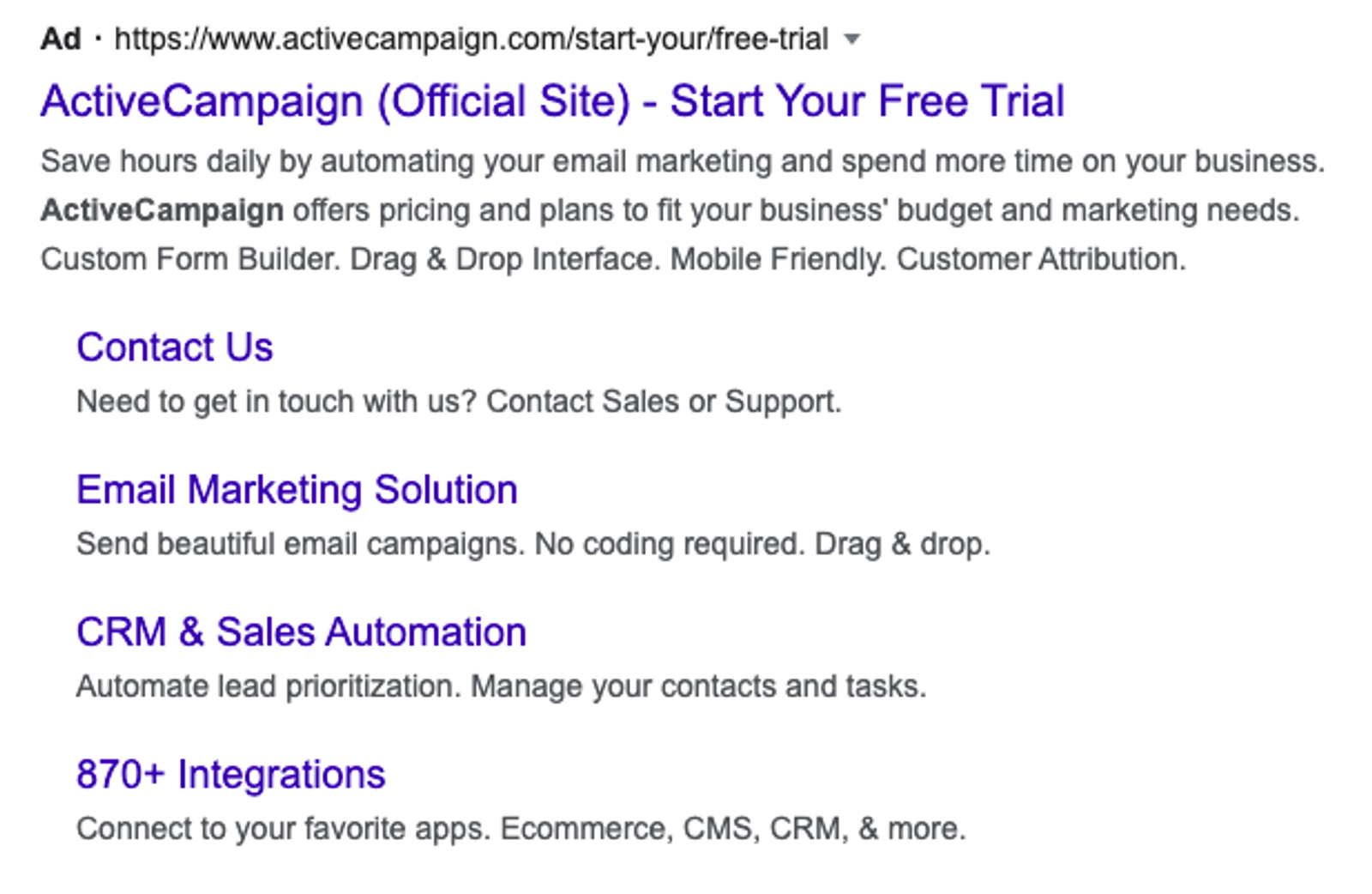

Paid ads

Running A/B tests on your paid ads is incredibly useful. It can tell you how best to structure your ads, what to include, and who you should target. And all of this will help you get the best ROI.

But what exactly can you test with paid ads?

Here are a few elements you can test:

- Headlines: Ad headlines are the first thing users see when they come across your ad, which makes them pretty important. Testing these headlines means you can find out which phrasing works best for your audience.

- Ad copy: This is the actual copy of your ad. To test ad copy, you can tweak the content and see which performs better. For example, you could test a short and sweet ad compared to a long and detailed ad. Take a look at our sponsored ad as an example.

- Targeting: Most social platforms allow you to target ads to a specific audience. A/B testing helps you determine what works best for each audience segment.

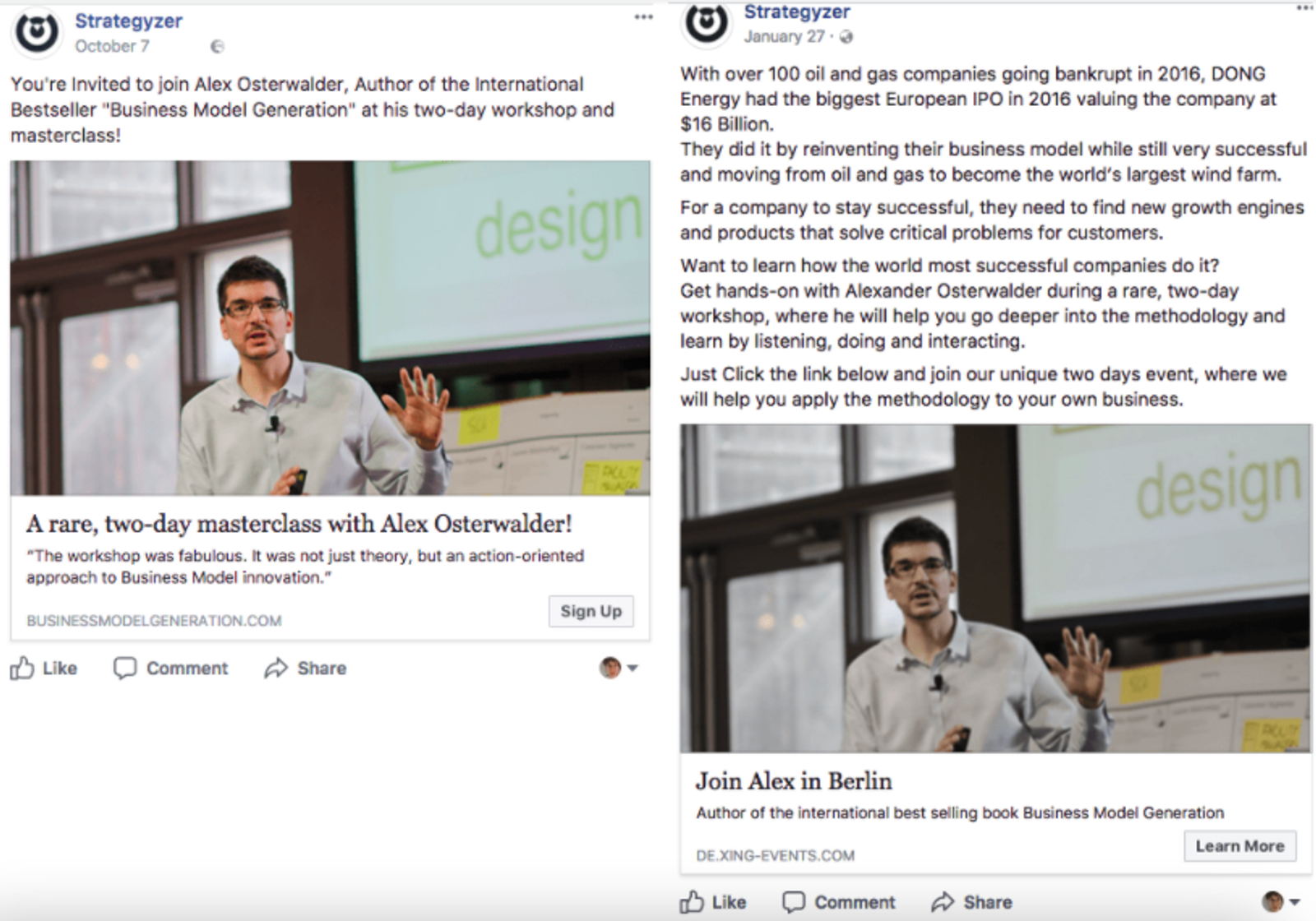

Strategyzer tested a Facebook ad. Their goal was to increase ticket sales for their upcoming event. The variable was the written content of the Facebook ad.

Version A was short and sweet, while Version B was more detailed.

The results? Version A got one sale over the course of three weeks, while Version B got 92. The results show that the longer and more detailed copy appealed to their audience.

Landing pages

Optimized landing pages play an important role in driving conversions. However, knowing the best way to structure your landing pages is not always easy. Fortunately, A/B testing allows you to find the structure that works best for your audience.

Here are some of the most popular elements you can test on a landing page:

- Headlines: When a user lands on your website, the headline is one of the first things they see. It needs to be clear, concise, and encourage the user to take action. A/B testing allows you to find the wording that works best for your audience.

- Call-to-action (CTA): CTAs encourage users to engage with your business, usually asking them to provide their contact information or make a purchase. To give yourself the highest chance of landing a conversion, you can test different CTAs to see what performs best. Take a look at our article on types of CTAs for some inspiration.

- Page layout: Your page layout can influence visitor behavior. If your website is tricky to navigate, chances are visitors won't stick around long. You can split-test a few layouts to find out what works best for your audience.

Brookdale Living used A/B testing on its Find a Community page.

The goal of their split test was to boost conversions from this page. The variables were the page design, layout, and text. They tested their original page (which was very text-heavy) alongside a new page with images and a clear CTA:

The test ran for two months with over 30,000 visitors.

During that time, the second variation increased their website conversion rate by almost 4% and achieved a $100,000 increase in monthly revenue. So it's safe to say the text-heavy approach didn't work for their target audience.

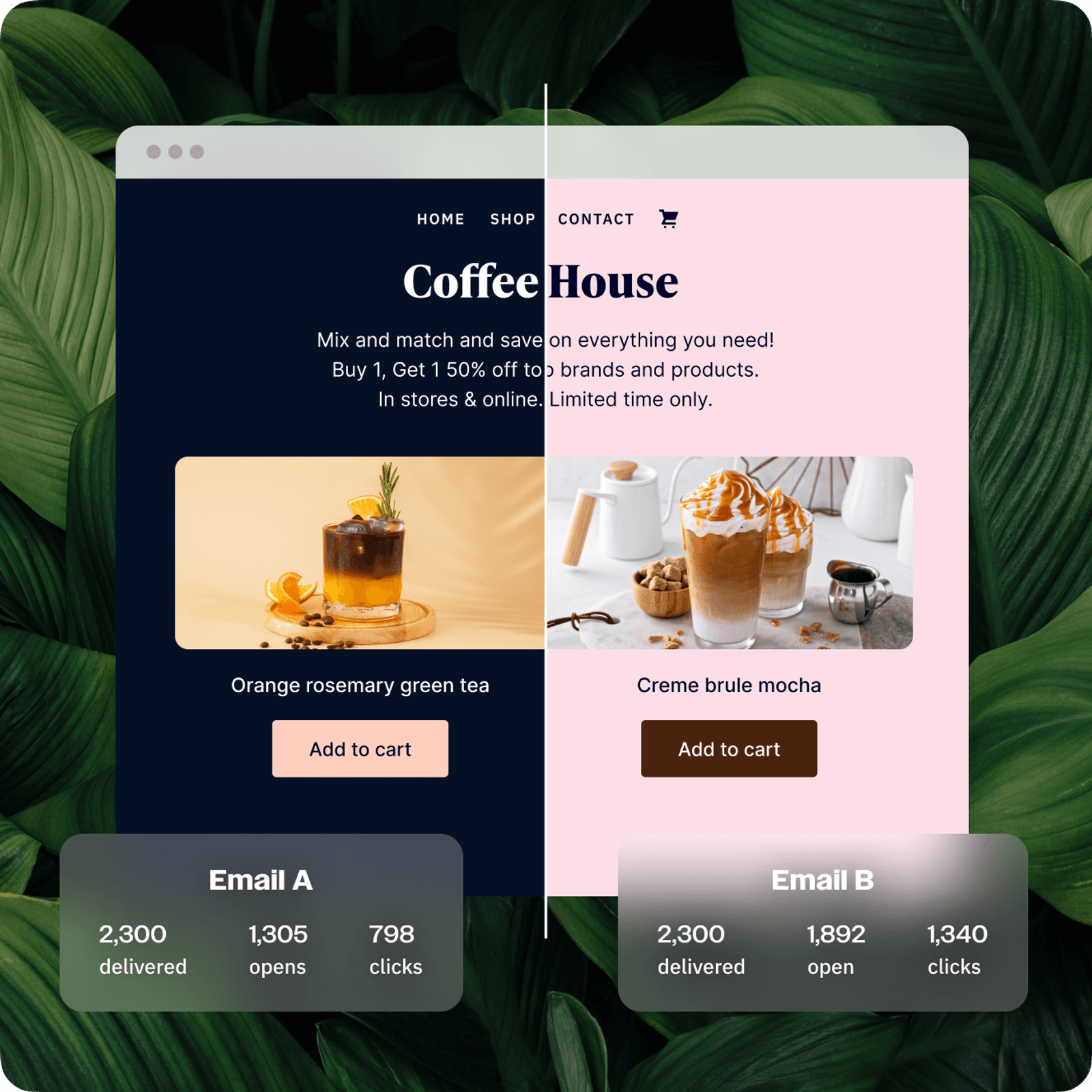

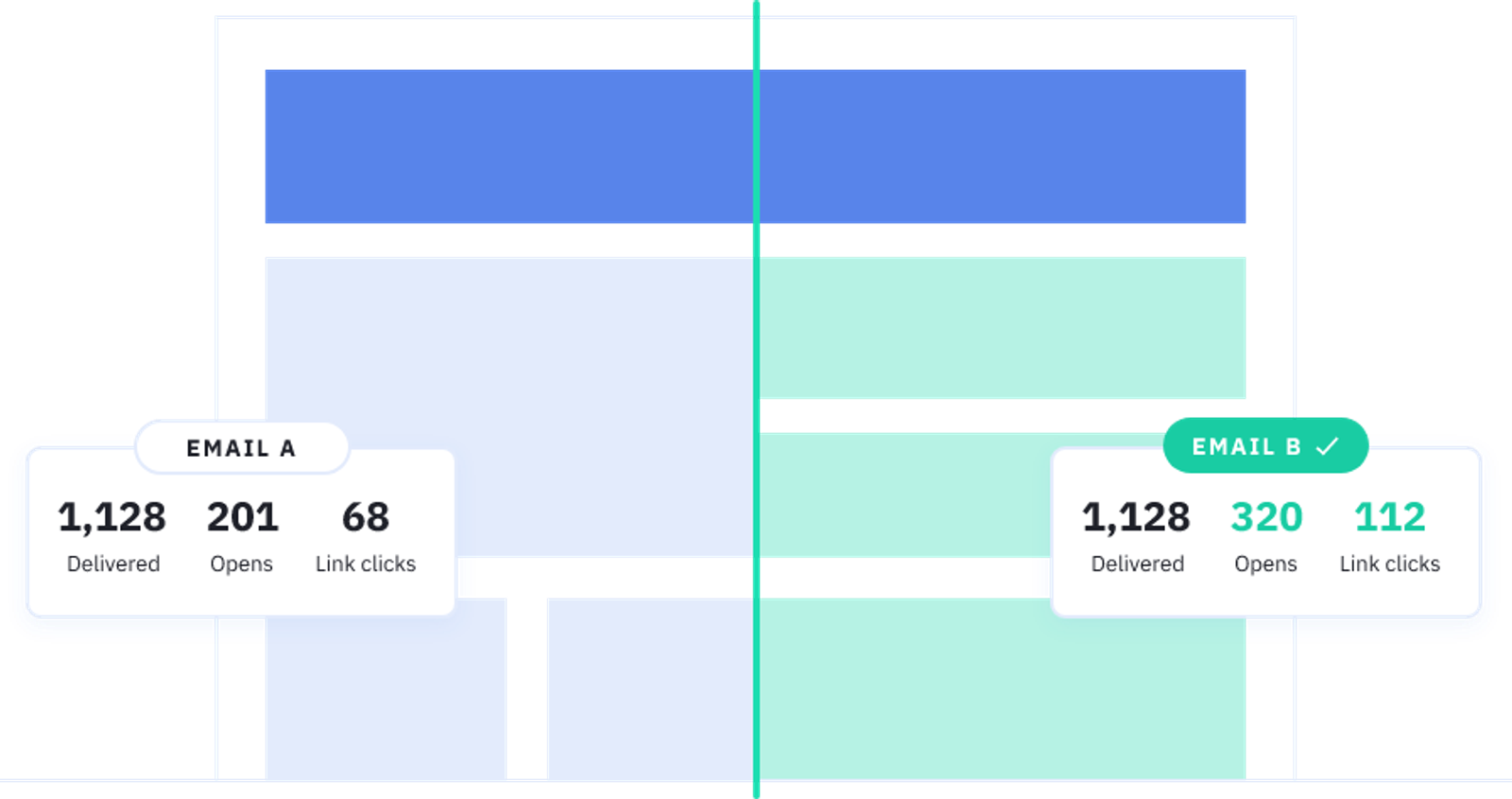

Emails

A/B testing your emails helps you create engaging emails that users actually want to read. And with the number of emails sent and received each day expected to reach 392.5 billion by 2026, you need all the help you can get to cut through the noise.

Here are a few areas you can test in your emails:

- Subject lines: Your subject line encourages users to open your email, so it needs to be good. Testing what type of subject line works best means you have a higher chance of increasing your open rate and click rate. Take a look at our subject line generator for some inspiration.

- Design: Similar to your landing pages, the design of your email can influence the way your audience engages with it. You can A/B test a few different email templates (including HTML versus plain text) to find out what works best.

- CTA: Experimenting with different types of CTAs, where they're placed, and the language you use will show you what works best for your audience.

How to set up and perform A/B testing in 5 simple steps

By now, you're probably wondering how to perform A/B testing so you can improve your conversion rates. To give you a helping hand, we've outlined five easy steps to A/B test any ad, landing page, or email.

1. Determine the goal of your test

First things first, you need to outline your business goals. This will give you a solid hypothesis for A/B testing and help you to stay on track throughout the process.

Not to mention, it helps the overall success of the company. By clearly outlining the goals for your A/B testing, you can be sure that your efforts contribute to the growth and success of the business.

So how do you figure out what your goals should be? The answer is simple.

Ask yourself what you want to learn from the A/B test.

Do you want to increase social media engagement? Improve your website conversion rate? Increase your email open rates? The answers to these questions will tell you what your goals should be.

But whatever you do, don't jump in and start testing random button sizes and colors. Your tests need to have a purpose to make them worthwhile.

2. Identify a variable to test

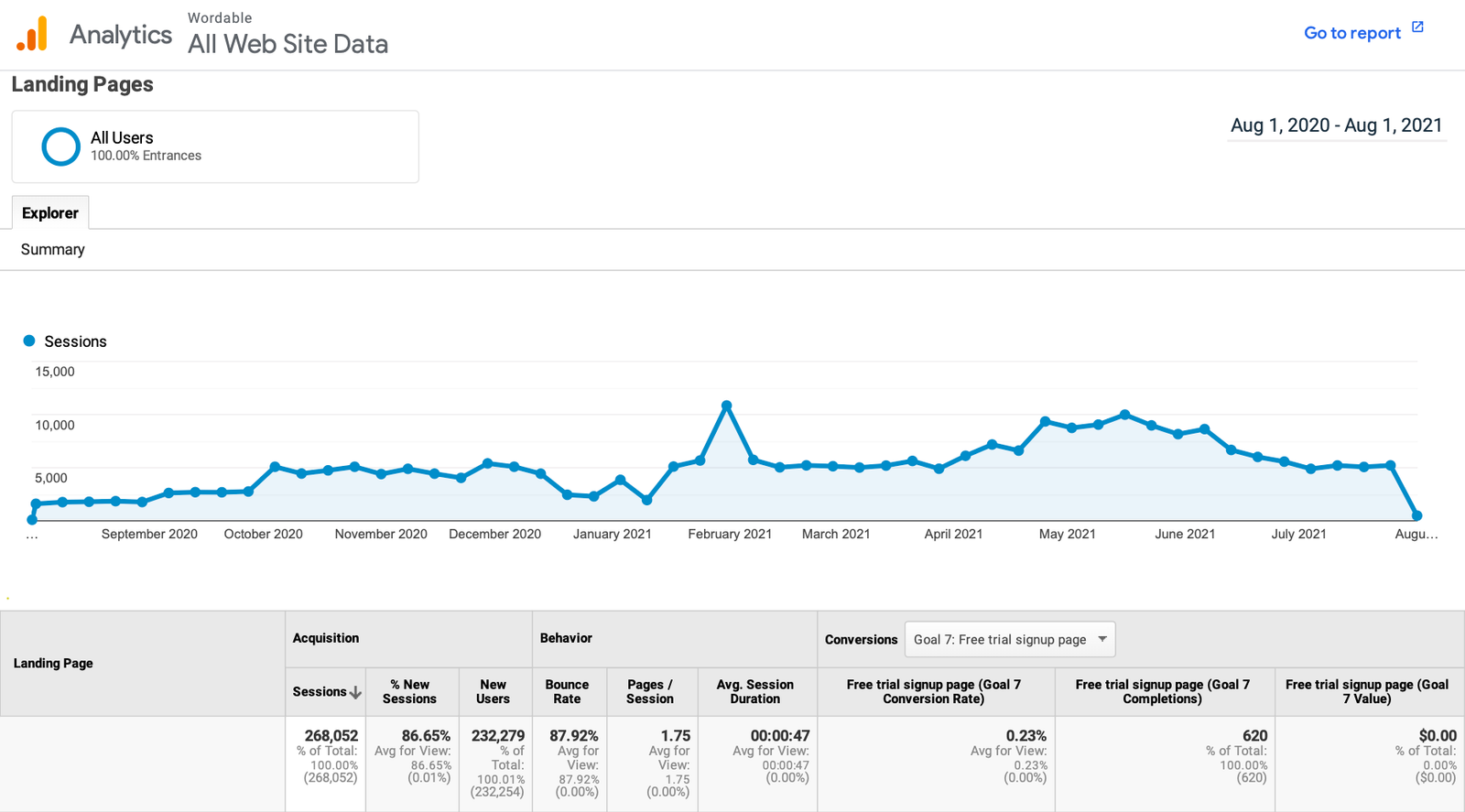

You've outlined your goals. Now, you need to find the right variable to test, which is where data comes in handy. Using past data and analytics, you can identify your underperforming areas and where you need to focus your marketing efforts.

For example, let's say your goal is to improve the user experience on your website. To find the right variable, you review Google Analytics to find the pages with the highest bounce rate.

Once you've narrowed down your search, you can compare these pages with your most successful landing pages. Is there anything different between them? If the answer is yes, this is your variable for testing.

You could also use multivariate testing to test more than one variable at once. Either way, the variable could be something as simple as a headline, a header image, or the wording on your CTA. This also forms your hypothesis: “If we change [X thing], we will increase [goal].” Now you just have to prove yourself right.

3. Use the right testing tool

You need to use the right testing program to make the most of your A/B test.

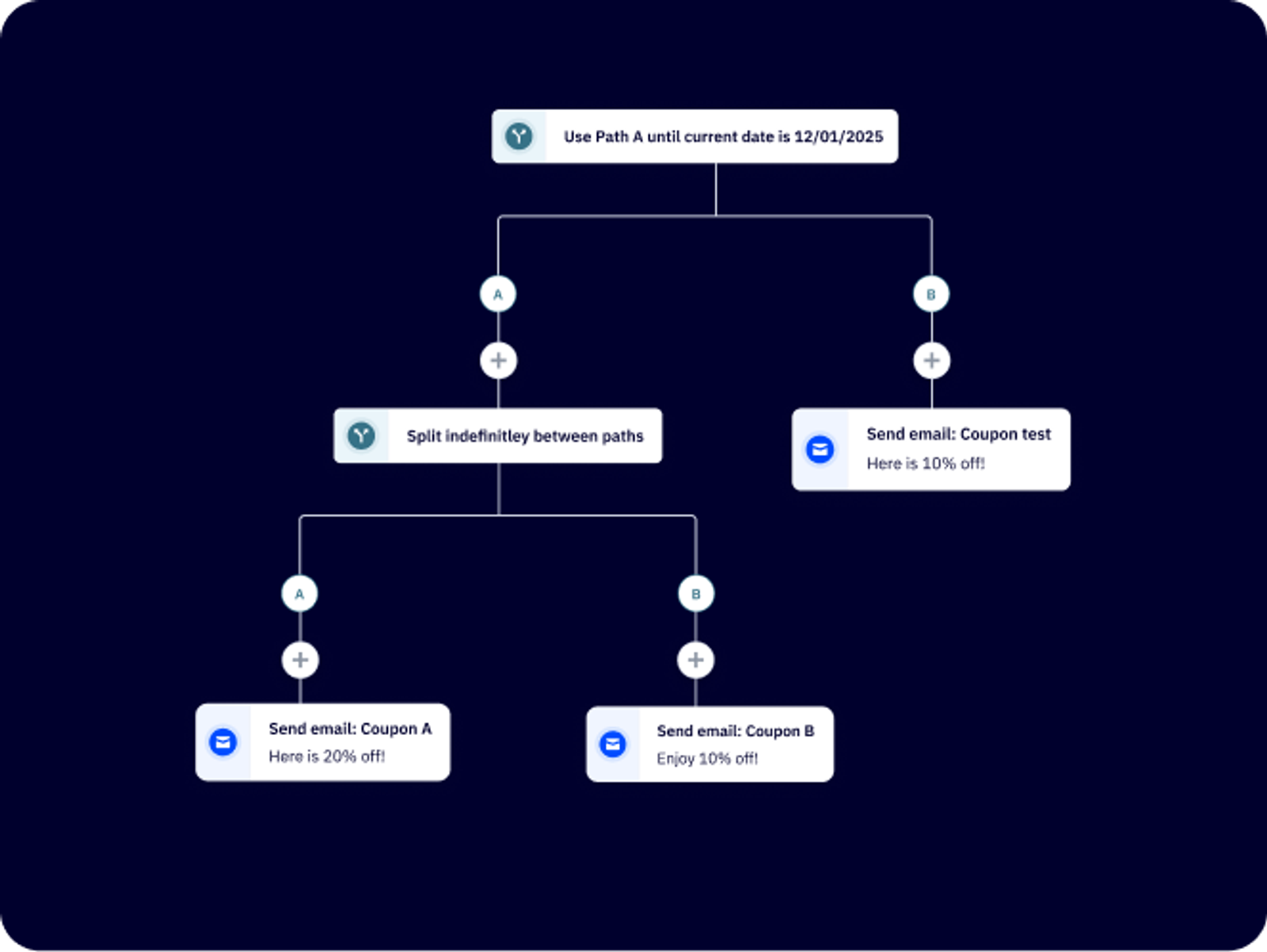

If you want to split-test your emails, a platform like ActiveCampaign is the right choice. Our software is equipped for email testing. You can track your campaigns, automate your split tests, and quickly review the results.

However, not all software is as user-friendly and intuitive as ActiveCampaign.

If you make the wrong choice, you're stuck using a platform that restricts your testing capabilities. As a result, your A/B tests could suffer, leaving you with unreliable results. So make sure you find the testing program that's ideally suited to your A/B test. This makes the entire process more efficient, easier to manage, and it'll help you to make the most out of your testing.

4. Set up your test

Using whatever platform you've chosen, it's time to get things up and running. Unfortunately, we can't give you a step-by-step guide to set up your test because every platform is different.

But we do advise running each A/B test on a single traffic source (for example, only email subscribers or only paid search visitors) rather than mixing traffic from several sources.

Why? Because the results will be more accurate.

You need to compare like with like. If you can't isolate a single source, segment your results by traffic source so you can review them with as much clarity as possible.

5. Track and measure the results

Throughout the test duration, you need to continually track the performance. This will allow you to make any changes if the test isn't running as planned. When the test is over, you can measure the results to find the winning variation and review the successes and failures.

At this stage, you can figure out the changes you need to make to improve the customer experience. But if there's little to no difference between your variations (less than 1%, say), you might need to keep the test running.

Why? Because you need a bigger dataset to draw conclusions.

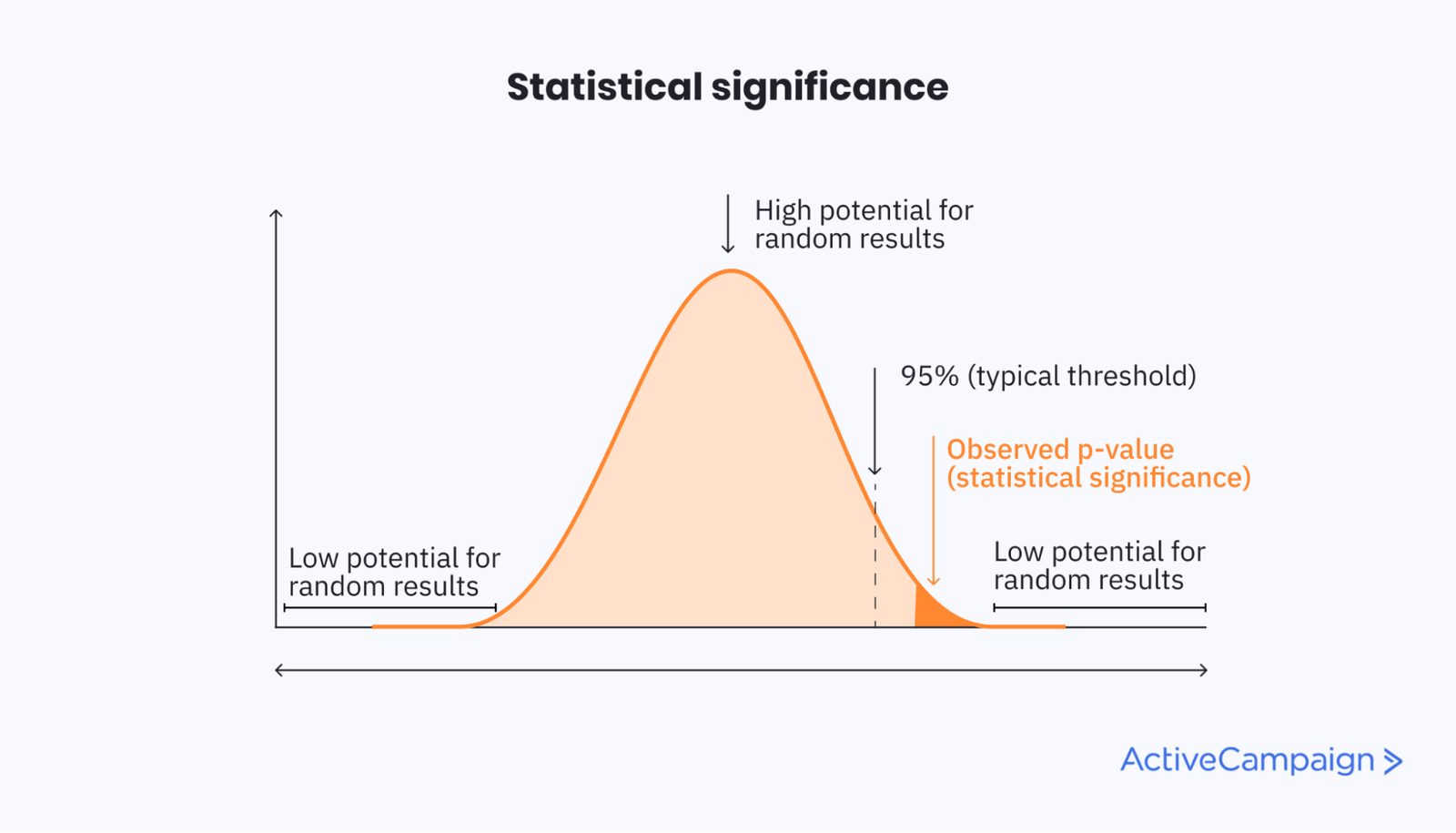

This is where statistical significance comes in handy.

What is statistical significance?

Statistical significance is used to confirm that results from testing are unlikely to have occurred randomly. It's a way of quantifying how much you can trust a particular result. In other words, an A/B test result is statistically significant if the difference between variations is unlikely to be explained by chance alone.

Here's a breakdown of the elements of statistical significance in more detail:

- The P-value: This is the probability of seeing a difference at least as large as the one you observed if the change actually had no effect. The smaller the P-value, the less likely it is that your results are down to chance (a threshold of 0.05 is the common standard for declaring statistical significance).

- Sample size: How big is the dataset? If it's too small, the results may not be reliable.

- Confidence level: This is how confident you want to be that the result didn't occur by chance. The typical confidence level is 95%, which corresponds to the 0.05 P-value threshold.

Let's use an example to put it into context. Imagine you run an A/B test on your landing page. On your current landing page, your CTA button is red. On the testing page, it's blue. After 1,000 website visits, you get 10 sales from the red button and 11 from the blue button.

Because these results are so similar, the difference could easily be down to chance rather than the change of color. This means it's not statistically significant.

But if the same test returned 10 sales from the red button and 261 sales from the blue button, it's unlikely this occurred by chance. This means it's statistically significant.
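Most testing tools run this calculation for you. As a sketch of what can happen under the hood, here is a standard two-proportion z-test in Python applied to the button example. The even 500/500 split is an assumption, since the example doesn't say how the 1,000 visits were divided, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates (pooled z-test)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Under "no real difference", both groups share one pooled conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Assumption: 500 visitors saw the red button and 500 saw the blue one.
print(round(two_proportion_p_value(10, 500, 11, 500), 2))  # 0.83, well above 0.05
print(two_proportion_p_value(10, 500, 261, 500) < 0.05)    # True: significant
```

A P-value of about 0.83 means a gap as small as 10 versus 11 sales would show up most of the time even if button color made no difference at all.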

If you struggle to identify whether your results are statistically significant, there are platforms out there that can help.

6 important A/B test metrics for marketers to track

There are a few key metrics you can track to reach your goal (whether that be a conversion goal or overall engagement).

Conversion rate

Conversion rate is the percentage of visitors who take a desired action on your website—whether that’s making a purchase, signing up for a newsletter, or filling out a contact form.

You can experiment with different elements like headlines, call-to-action (CTA) buttons, page layouts, and even color schemes to boost it. A/B testing these elements helps you see what resonates most with your audience, making it easier to turn casual visitors into engaged customers. Small tweaks, like adjusting CTA wording or simplifying your checkout process, can make a big impact on your overall conversions.

Website traffic

Website traffic refers to the number of visitors coming to your site, and the more you have, the more opportunities you create for engagement and conversions.

To boost traffic, you can experiment with different elements like blog titles, meta descriptions, homepage headlines, and even featured images. Testing these elements helps you understand what grabs your audience’s attention and encourages them to click through. The better you optimize your content for appeal and visibility (whether through SEO, social media, or email), the more visitors you’ll attract and ultimately convert.

Bounce rate

Bounce rate is the percentage of visitors who leave your website without taking any action, like clicking a link or navigating to another page.

To reduce it, you can experiment with page layouts, loading speeds, CTA placements, and even how much text appears above the fold. Testing one change at a time helps you pinpoint what’s keeping visitors from sticking around. Simple tweaks like making your content easier to scan, adding engaging visuals, or improving site speed can encourage people to explore further instead of bouncing away.

Email open rate

Email open rate measures the percentage of recipients who open your email, making it a key metric for engagement and deliverability.

To improve it, test subject line variations, preview text, sender names, and send times to identify what resonates best with your audience. Personalization, dynamic content, and list segmentation can also drive higher open rates by making emails more relevant. Avoiding spam triggers and maintaining a clean list helps ensure your emails actually land in inboxes.

Click-through rate

Click-through rate (CTR) measures the percentage of email recipients who click on a link within your email, showing how effective your content and CTAs are at driving engagement.

To boost CTR, experiment with CTA placement, button design, anchor text, and even the length of your email copy. A/B testing different messaging styles—concise vs. detailed, direct vs. conversational—can also reveal what resonates best. Personalization and dynamic content help make links more relevant, while mobile-friendly formatting ensures a seamless experience.

Revenue per visitor

Revenue per visitor (RPV) measures how much revenue each website visitor generates on average, giving you insight into the effectiveness of your marketing and conversion strategies.

To increase RPV, focus on optimizing your email-driven traffic by improving segmentation, personalizing offers, and refining your post-click experience. A/B test product recommendations, discount strategies, and checkout flows to see what drives higher average order values.

Additionally, fine-tuning your email frequency and targeting can ensure you attract high-intent visitors who are more likely to convert and spend more.
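To compare variations on these metrics, each one is a simple ratio of raw counts (website traffic is just the visitor count itself). The Python sketch below uses a hypothetical `VariantStats` record and made-up numbers; it also assumes one session per visitor, which real analytics tools don't always do.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Hypothetical raw counts collected for one variation of a test."""
    visitors: int           # treated as one session per visitor, for simplicity
    conversions: int        # visitors who completed the goal action
    bounces: int            # visitors who left without interacting
    revenue: float          # total revenue attributed to this variation
    emails_delivered: int
    unique_opens: int
    unique_clicks: int      # recipients who clicked at least one link

    def conversion_rate(self) -> float:
        return self.conversions / self.visitors

    def bounce_rate(self) -> float:
        return self.bounces / self.visitors

    def open_rate(self) -> float:
        return self.unique_opens / self.emails_delivered

    def click_through_rate(self) -> float:
        # Clickers divided by email recipients, as in the CTR definition above.
        return self.unique_clicks / self.emails_delivered

    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors


variant_b = VariantStats(visitors=2_000, conversions=50, bounces=900, revenue=4_000.0,
                         emails_delivered=1_000, unique_opens=250, unique_clicks=40)
print(f"Conversion rate: {variant_b.conversion_rate():.1%}")          # 2.5%
print(f"Bounce rate: {variant_b.bounce_rate():.1%}")                  # 45.0%
print(f"Open rate: {variant_b.open_rate():.1%}")                      # 25.0%
print(f"Click-through rate: {variant_b.click_through_rate():.1%}")    # 4.0%
print(f"Revenue per visitor: ${variant_b.revenue_per_visitor():.2f}") # $2.00
```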

How to read and interpret A/B test results

You may use a variety of testing tools, like marketing automation or lead scoring software, that help you read data and find the insights that'll help you make informed decisions. Still, it's helpful to know how to read your test results, so let's look at how you can do this independently.

- Look at your unique goal metric: This is most likely your conversion rate. If you're using a split testing tool or calculator, you'll see a separate result for each variation you're testing.

- Compare: Typically, you'll have one variation that is the clear winner, but sometimes the results are too close to determine anything. In that case, the test hasn't reached statistical significance, which we talked about earlier. If your results are neck and neck, neither version will significantly improve your conversion rate, which takes you right back to the start, where you'll need to test another element.

- Segment further for more information: A valuable way to get more insight from your test is to segment even further. This could mean looking at where the clicks came from (blog, social, website, ad), who your visitors were (new prospects or existing customers), and the avenue they viewed your content on (desktop or mobile). Breaking these down will help you understand your test on a deeper level so you can target the right people in your next campaign.
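If your tool lets you export per-visitor results, segmenting them takes only a few lines. Here is a minimal Python sketch, assuming a hypothetical export with one record per visitor (variation, traffic source, device, and whether they converted):

```python
from collections import defaultdict
from typing import NamedTuple


class Visit(NamedTuple):
    """One hypothetical visitor record exported from a testing tool."""
    variant: str
    source: str      # e.g. "email", "social", "ad"
    device: str      # "desktop" or "mobile"
    converted: bool


def conversion_by_segment(visits, field):
    """Return {(segment_value, variant): conversion rate} for one field, such as "device"."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for visit in visits:
        key = (getattr(visit, field), visit.variant)
        totals[key] += 1
        conversions[key] += visit.converted  # True counts as 1, False as 0
    return {key: conversions[key] / totals[key] for key in totals}


visits = [
    Visit("A", "email", "mobile", True),
    Visit("A", "email", "desktop", False),
    Visit("A", "social", "mobile", False),
    Visit("B", "email", "mobile", True),
    Visit("B", "social", "desktop", True),
    Visit("B", "social", "mobile", False),
]

for (segment, variant), rate in sorted(conversion_by_segment(visits, "device").items()):
    print(f"{segment:<8} {variant}: {rate:.0%}")
```

Keep in mind that each segment contains fewer visitors than the test as a whole, so a gap within one segment needs its own significance check before you act on it.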

Remember to conduct your own A/B tests

All of these examples show the success stories behind A/B testing. But just because these tests worked for these businesses doesn't mean the same tests will work for yours.

You'll need to do your own testing to determine what your audience wants. Scroll back up to our ‘What can you A/B test?’ section for ideas on testing paid ads, landing pages, and emails.

Drive better results with A/B testing in ActiveCampaign

With ActiveCampaign, the built-in A/B testing features aren’t only easy to use, they’re designed to drive results.

Smarter A/B testing

With ActiveCampaign’s built-in A/B testing inside automated workflows, you’re not just guessing what works, you’re making data-backed decisions at scale. Test different subject lines, email content, send times, and even entire automation paths within a single workflow.

ActiveCampaign dynamically splits traffic, ensuring clean, actionable results that show exactly what moves the needle.

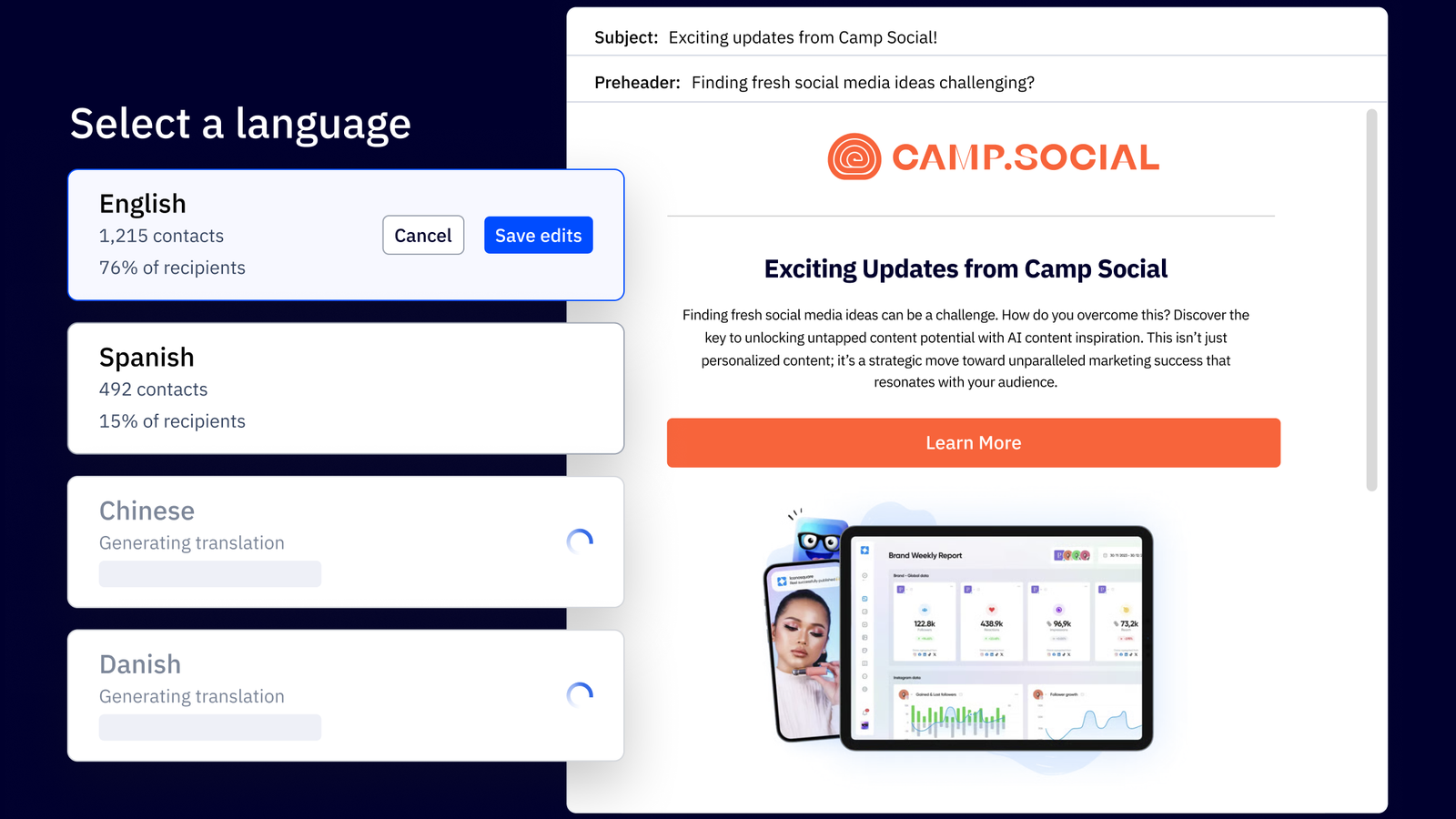

Hyper-personalized variations for deeper insights

ActiveCampaign takes A/B testing further with segmentation and dynamic content. Instead of generic split tests, you can personalize each variation based on contact behavior, preferences, or lifecycle stage. This means you’re not just finding what works in general, you’re discovering what works best for each audience segment, giving you more precise, high-impact results.

Real-time reporting for instant optimization

The moment you launch a test, real-time reporting kicks in. See which variation is driving the most opens, clicks, and conversions without waiting weeks for insights. Once a winner emerges, you can automatically shift traffic to the best-performing version or apply those learnings across emails, landing pages, and even ad campaigns.

With ActiveCampaign, A/B testing isn’t just an experiment; it’s a scalable strategy for optimizing every touchpoint in your marketing funnel.

Ready to start?

A/B testing FAQs

Lingering questions? We’ve got you.

What is statistical significance in split testing?

Statistical significance in split testing determines whether the differences observed between two variations are likely due to the changes made rather than random chance. Achieving statistical significance ensures that the results of A/B tests (such as comparing two subject lines or call-to-action buttons) are reliable and can confidently inform future campaign decisions.

For example, if one email version yields a 5% response rate and another 3%, statistical significance testing helps ascertain whether this 2-percentage-point difference is meaningful or merely coincidental.
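Whether a 5% versus 3% gap is meaningful depends heavily on how many people received each version. One way to see this is a 95% confidence interval for the difference: if the interval includes zero, the gap could be chance. A Python sketch using the simple Wald interval, with hypothetical group sizes of 200 and 2,000 recipients:

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(rate_a, n_a, rate_b, n_b, confidence=0.95):
    """Wald confidence interval for (rate_a - rate_b)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    std_err = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    diff = rate_a - rate_b
    return diff - z * std_err, diff + z * std_err


for n in (200, 2_000):  # hypothetical recipients per version
    low, high = diff_confidence_interval(0.05, n, 0.03, n)
    verdict = "significant" if low > 0 or high < 0 else "not significant"
    print(f"n={n}: {low:+.2%} to {high:+.2%} ({verdict})")
```

With 200 recipients per version the interval spans zero, so the same 2-point gap isn't significant; with 2,000 per version it no longer does.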

How do you determine the sample size needed for an A/B test?

To determine the minimum sample size for an A/B test in email marketing, follow these steps:

- Current conversion rate: Know your baseline (e.g., 20% click-through rate).

- Desired improvement: Decide on the smallest difference you want to detect (e.g., 5 percentage points, from 20% to 25%).

- Confidence level: Aim for 95% confidence (standard for A/B tests).

- Prioritize statistical power: Set it to 80% or higher to reduce the risk of false negatives (missing an improvement that really exists).

With these factors, you can use online calculators to find how many recipients you need per test group. For example, with a 20% baseline rate and a goal of detecting a 5-percentage-point improvement, you might need around 1,000 recipients per version. This helps ensure your test results are reliable and useful.
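Online calculators typically rely on a normal-approximation formula for comparing two proportions. Here is a minimal Python sketch of one common version of that formula (individual calculators may use slightly different variants, so expect small differences); the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline: float, target: float,
                          confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate recipients needed per version to detect a change from baseline to target.

    n = (z_alpha + z_beta)^2 * (p1(1 - p1) + p2(1 - p2)) / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%, two-sided
    z_beta = NormalDist().inv_cdf(power)                        # about 0.84 for 80% power
    variance = baseline * (1 - baseline) + target * (1 - target)
    return ceil((z_alpha + z_beta) ** 2 * variance / (target - baseline) ** 2)


print(sample_size_per_group(0.20, 0.25))  # 5-point absolute lift: roughly 1,090
print(sample_size_per_group(0.20, 0.21))  # 5% relative lift: roughly 25,600
```

The "around 1,000 recipients" figure matches the first call, which treats the goal as an absolute lift from 20% to 25%. Reading "5%" as a relative lift (20% to 21%) pushes the requirement up more than twentyfold, so it pays to be explicit about which kind of improvement you're targeting.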

How long should an A/B test run to achieve reliable results?

To achieve reliable results in your A/B email tests, aim to run them for at least 24 to 48 hours. This timeframe helps capture a representative sample of your audience's behavior, accounting for variations in open times and engagement patterns.

One study found that a 24-hour test duration yielded actionable insights, with a sample size of 6,600 contacts and a 13.2% test group participation rate.